Metrics Matter: Why Security Needs to Move From Trust to Evidence

In security, trust has traditionally been the fallback:

“We trust our scanners are tuned.”

“We trust developers will fix what matters.”

“We trust our dashboards reflect reality.”

But as most AppSec folks know, this falls apart under scrutiny more often than not. Tools flag the same issue 500 times. Findings go stale because devs have better things to do and tune them out. Remediation dashboards quietly drift into irrelevance; I've personally created dashboards that were looked at for maybe a week.

At Smithy, we have come to believe that security should be measurable, observable, and improvable, just like everything else in modern engineering.

The Findings Problem — And What Happens When You Track It

The Smithy team is all about transparency:

- We build in the open with the core data structure AND the SDK being open source.

- We publish our changelog every week.

Our PoCs also all start with the same question: "What numbers are you aiming to achieve? No matter how unrealistic they may be." This makes our users think hard about what they want and focuses the PoC on measurable outcomes.

Example:

One of Smithy's benefits is noise detection. For every PoC, we find a way to track the delta between findings and noise for the duration of the PoC. We prefer to report it in downstream systems for better integration, but we also report it on our frontend as a backup. On a recent engagement, we tracked how Smithy, over time, learned to automatically suppress false positives based on organizational patterns, and reduced noise by 99.2% in a real-world deployment for a major internet provider. Of course, this is a single use case, which could simply mean a good fit; that is why there is no case study for it.

But this isn't magic. It is simple math. Smithy achieved this in the following way:

- Removed historical duplicates.

- Removed duplicates across multiple tools.

- Removed findings without exploits.

- Removed unreachables.

- Watched which findings were fixed.

- Correlated untouched findings with context: repo, author, PR pattern, scanner, severity.

- Over time, we learned which types of findings never lead to action — and enabled features that suppressed them (e.g. CWEs related to TLS in a service that is internal only, or low and medium findings against a service that is of low business criticality).
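The steps above can be sketched as a small rule pipeline. This is a minimal illustration, not Smithy's implementation: the `Finding` fields, the `triage` function, the status names, and the list of TLS-related CWEs are all assumptions made for the example.

```python
from dataclasses import dataclass

# Illustrative set of TLS-related CWEs; a real deployment would choose its own.
TLS_CWES = {"CWE-295", "CWE-319", "CWE-326"}


@dataclass(frozen=True)
class Finding:
    # Hypothetical shape for this sketch, not Smithy's data model.
    tool: str
    cwe: str
    file: str
    line: int
    severity: str                    # "low", "medium", "high", "critical"
    has_known_exploit: bool
    reachable: bool
    internal_only: bool = False      # service is not exposed externally
    low_criticality: bool = False    # service has low business criticality


def fingerprint(f: Finding) -> tuple:
    # The tool name is left out on purpose, so the same issue reported
    # by two scanners collapses into a single finding.
    return (f.cwe, f.file, f.line)


def triage(findings, seen_before=frozenset()):
    """Split findings into kept ones and suppressed ones grouped by status.

    `seen_before` holds fingerprints from earlier scans (historical duplicates).
    """
    kept = []
    suppressed = {"duplicate": [], "unexploitable": [], "unreachable": [], "context": []}
    seen = set(seen_before)
    for f in findings:
        fp = fingerprint(f)
        if fp in seen:
            suppressed["duplicate"].append(f)
            continue
        seen.add(fp)
        if not f.has_known_exploit:
            suppressed["unexploitable"].append(f)
        elif not f.reachable:
            suppressed["unreachable"].append(f)
        elif (f.cwe in TLS_CWES and f.internal_only) or (
            f.severity in {"low", "medium"} and f.low_criticality
        ):
            suppressed["context"].append(f)
        else:
            kept.append(f)
    return kept, suppressed


def noise_reduction(total: int, kept: int) -> float:
    """Fraction of findings removed as noise."""
    return (total - kept) / total if total else 0.0
```

Keeping the suppressed findings grouped by status, rather than discarding them, is what lets the removed results stay visible and auditable afterwards.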

With no ML ops, just rules and evidence, we cut noise from 2.5k alerts per scan to 2, a reduction of 99.2%. To make this information clear, we show the silenced and removed results in Smithy itself. In the findings list, you can choose to see findings with a designation of "duplicate", "unexploitable", or "unreachable", among many other statuses.

Why Metrics Like This Matter

Security often talks about risk, but is rarely clear about what risk is. For risk to decrease, it has to be measurable first, and you can only measure what you have clearly defined.

Risk stays vague because most teams don’t track:

- Percentage of issues triaged and fixed

- Time-to-fix vs time-to-ignore

- Tool trustworthiness (false positive rate)

- Developer feedback loops
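As a rough illustration, the first three of these metrics can be computed from nothing more than a list of finding outcomes. The `Outcome` record, its `resolution` values, and the `summarize` function are hypothetical names chosen for this sketch; the false positive rate here is taken over triaged findings, which is one of several reasonable definitions.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Outcome:
    # Hypothetical record of what happened to one finding.
    opened: datetime
    closed: Optional[datetime]   # None while the finding is still open
    resolution: str              # "fixed", "false_positive", "ignored", or "open"


def _median_days(items):
    # Median time from opened to closed, in days; None if nothing was closed.
    days = [(o.closed - o.opened).total_seconds() / 86400 for o in items if o.closed]
    return median(days) if days else None


def summarize(outcomes):
    total = len(outcomes)
    if total == 0:
        return {}
    triaged = [o for o in outcomes if o.resolution != "open"]
    fixed = [o for o in outcomes if o.resolution == "fixed"]
    ignored = [o for o in outcomes if o.resolution == "ignored"]
    false_pos = [o for o in outcomes if o.resolution == "false_positive"]
    return {
        "triaged_pct": 100 * len(triaged) / total,
        "fixed_pct": 100 * len(fixed) / total,
        # Share of triaged findings that turned out to be false positives.
        "false_positive_rate_pct": 100 * len(false_pos) / len(triaged) if triaged else None,
        "median_days_to_fix": _median_days(fixed),
        "median_days_to_ignore": _median_days(ignored),
    }
```

Breaking the same summary down per scanner or per team is what turns it into a measure of tool trustworthiness rather than a single global number.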

Smithy flips that model by:

- Standardizing all security signals into OCSF

- Observing what happens after alerts are created

- Filtering or prioritizing future alerts based on real outcomes

- Surfacing this data back to security and dev teams in real time
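The feedback step, filtering future alerts based on real outcomes, could look something like the sketch below. It is an assumption-laden illustration rather than Smithy's logic: the `suppression_candidates` function, the `(scanner, rule_id, acted_on)` history format, and the thresholds are all invented for the example.

```python
from collections import defaultdict


def suppression_candidates(history, min_samples=20, max_action_rate=0.0):
    """Return (scanner, rule_id) pairs that teams effectively never act on.

    `history` is an iterable of (scanner, rule_id, acted_on) tuples, where
    acted_on is True if the finding was fixed or otherwise handled.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [acted, total]
    for scanner, rule_id, acted_on in history:
        entry = counts[(scanner, rule_id)]
        entry[0] += int(acted_on)
        entry[1] += 1
    return sorted(
        key
        for key, (acted, total) in counts.items()
        # Require enough observations before trusting the pattern.
        if total >= min_samples and acted / total <= max_action_rate
    )
```

The `min_samples` guard matters: a rule that fired three times and was ignored three times is not yet evidence of noise, so it is not proposed for suppression.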

The result is evidence-based security — built on what actually happens, not what should happen.

The Takeaway: If You Can’t Measure It, You Can’t Improve It

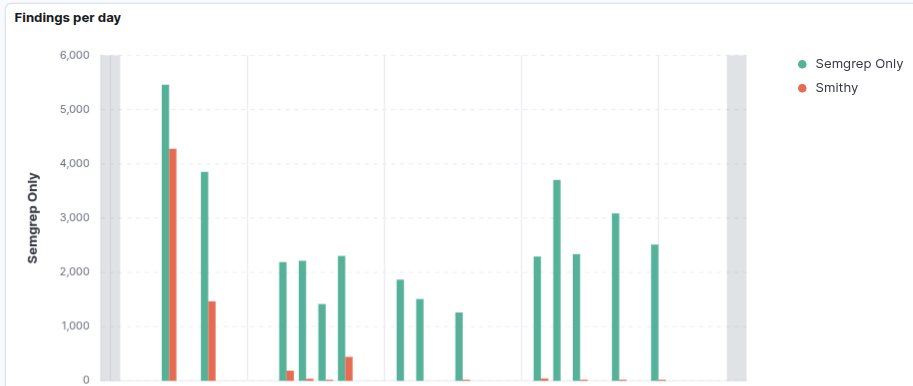

The screenshot below tells the story in one image:

Smithy reduced false positives over time by learning what your teams really act on.

This was after a week of scanning with the free, open source Semgrep (which the contact already had) compared with Semgrep running through Smithy with noise reduction turned on.

This isn’t about replacing scanners.

It’s about orchestrating them with feedback, context, and memory.

It’s about moving away from dashboards that say “trust me” and toward systems that prove what works.

Want to Try It?

Smithy is open-core.

You can deploy the basic engine, connect your tools, and start tracking what your team acts on — and what they ignore — today.

Want support, advanced prioritisation, noise reduction, enrichment or remediation?

Talk to us about SaaS or On-Prem.

Because trust is acceptable.

But evidence is better.
